Regulating AI Should Be a Joint Endeavor

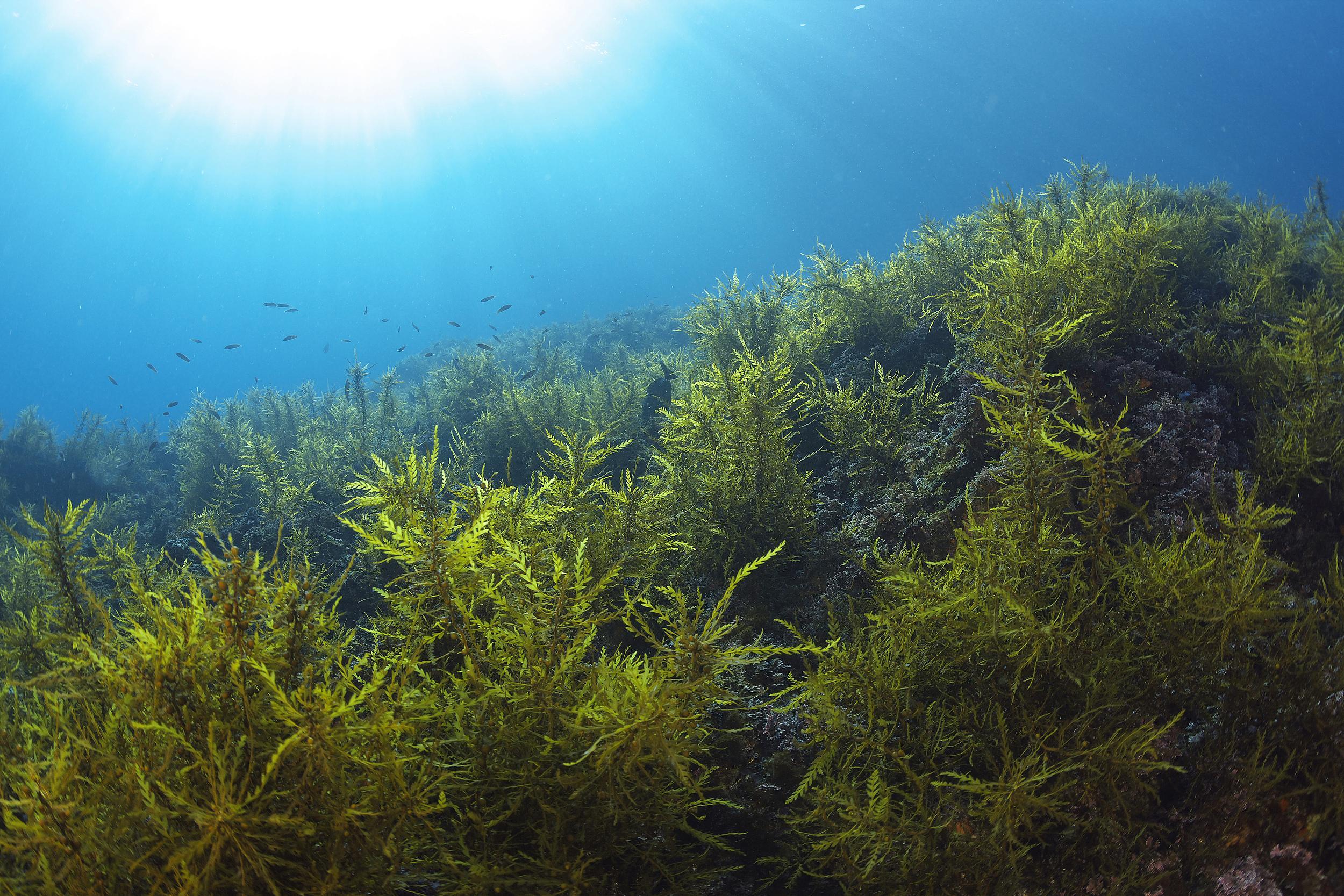

PHOTO: XINHUA

By Staff Reporters

Regulation of AI is now firmly on the global agenda. On May 21, 16 companies from different countries and regions, including leading AI developers OpenAI, Google, Amazon and Microsoft, signed the Frontier AI Safety Commitments at the AI Seoul Summit. On the same day, the EU AI Act received final approval.

Since ChatGPT became publicly available at the end of 2022, widespread access to it has heightened public awareness of the growing risks posed by advanced AI systems, which may challenge many norms of human life.

Generally speaking, AI runs the risk of perpetuating discrimination, spreading misinformation and exposing sensitive personal information. In one documented case, a training data extraction attack on GPT-2 recovered personally identifiable information, including phone numbers and email addresses, that had been published online and ended up in the model's training data.

Large language models closely mirror the language found in their training data, which may be drawn from sources as varied as online books and internet forums. With such sources, the quality of AI training data cannot be fully guaranteed.

Human speakers usually weigh multiple social and personal factors, such as listeners' emotional responses, social impact and ideological attitudes. Because these models cannot speak with that kind of judgment, they may reproduce biased language from their training data and aggravate discrimination against certain groups of people.

AI-induced misinformation may also worsen. Whereas discriminatory attitudes can in some cases develop unconsciously over time, as information is absorbed without careful reflection, misinformation is false information that spreads regardless of whether anyone intends to mislead, and it erodes societal trust in shared information.

On the regulatory side, governments need to establish agile AI regulatory agencies and fund them adequately. The annual budget of the U.S. AI Safety Institute is currently $10 million, which may sound substantial but pales in comparison with the $6.7 billion budget of the U.S. Food and Drug Administration.

Countries across the globe should not only enact laws grounded in their existing legal systems but also seek viable approaches to international cooperation. The AI field requires stricter risk assessments and enforceable measures rather than reliance on vague model evaluations. Meanwhile, AI companies should be required to prioritize safety and demonstrate that their systems will not cause harm. In short, AI developers must take responsibility for ensuring the safety of their technologies.

The international community now recognizes that comprehensive regulation of AI must be accelerated. This needs to be a united effort, and every company involved in AI development must commit to upholding these standards to protect societal values and trust. Only through vigilant regulation and cooperation can we harness the full potential of AI while mitigating its risks.